Stairway to Success: An Online Floor-Aware Zero-Shot Object-Goal Navigation Framework via LLM-Driven Coarse-to-Fine Exploration

Testing our method in real-world environments.

Multi-floor and single-floor navigation with open-vocabulary target objects.

Deployable service and delivery robots struggle to navigate multi-floor buildings to reach object goals, because existing systems rely on single-floor assumptions and require offline, globally consistent maps. Multi-floor environments pose unique challenges, including cross-floor transitions and vertical spatial reasoning, especially when navigating unknown buildings. Object-Goal Navigation benchmarks such as HM3D and MP3D also capture this multi-floor reality, yet current methods lack support for online, floor-aware navigation. To bridge this gap, we propose ASCENT, an online framework for Zero-Shot Object-Goal Navigation that enables robots to operate without pre-built maps or retraining on new object categories. It introduces: (1) a Multi-Floor Abstraction module that dynamically constructs hierarchical representations with stair-aware obstacle mapping and cross-floor topology modeling, and (2) a Coarse-to-Fine Reasoning module that combines frontier ranking with LLM-driven contextual analysis for multi-floor navigation decisions. On the HM3D and MP3D benchmarks, ASCENT outperforms state-of-the-art zero-shot approaches, and we demonstrate real-world deployment on a quadruped robot.
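As a rough illustration of the kind of hierarchical, floor-aware representation described above, the sketch below models each floor as a node that holds its own BEV grid and records observed stairways as edges between floors. It is not ASCENT's implementation: the names `FloorMap` and `MultiFloorMap` and the `FREE`/`OBSTACLE`/`STAIR` labels are hypothetical, and stair cells are assumed to come from an upstream detector rather than being inferred here.

```python
from collections import deque
from dataclasses import dataclass, field

# Hypothetical cell labels for one floor's bird's-eye-view (BEV) grid.
FREE, OBSTACLE, STAIR = 0, 1, 2


@dataclass
class FloorMap:
    """One node of the hierarchy: a floor's BEV grid (rows of cell labels)."""
    floor_id: int
    grid: list

    def stair_cells(self):
        # Stair cells are kept distinct from obstacles, so a planner can treat
        # them as floor-transition points rather than as walls.
        return [(r, c) for r, row in enumerate(self.grid)
                for c, label in enumerate(row) if label == STAIR]


@dataclass
class MultiFloorMap:
    """Floors as nodes, observed stairways as undirected edges between floors."""
    floors: dict = field(default_factory=dict)
    edges: dict = field(default_factory=dict)  # floor_id -> set of adjacent floor_ids

    def add_floor(self, floor_map: FloorMap) -> None:
        # Floors are added online, as the robot first observes them.
        self.floors[floor_map.floor_id] = floor_map
        self.edges.setdefault(floor_map.floor_id, set())

    def connect(self, a: int, b: int) -> None:
        # Record a stairway linking floors a and b once it has been observed.
        self.edges.setdefault(a, set()).add(b)
        self.edges.setdefault(b, set()).add(a)

    def reachable_floors(self, start: int) -> set:
        # Breadth-first search over the floor-level topology.
        seen, queue = {start}, deque([start])
        while queue:
            current = queue.popleft()
            for nxt in self.edges.get(current, ()):
                if nxt not in seen:
                    seen.add(nxt)
                    queue.append(nxt)
        return seen


if __name__ == "__main__":
    building = MultiFloorMap()
    building.add_floor(FloorMap(0, [[FREE, STAIR], [OBSTACLE, FREE]]))
    building.add_floor(FloorMap(1, [[FREE, FREE], [STAIR, FREE]]))
    building.add_floor(FloorMap(2, [[FREE]]))  # seen, but no stairway to it yet
    building.connect(0, 1)
    print(building.floors[0].stair_cells())      # [(0, 1)]
    print(sorted(building.reachable_floors(0)))  # [0, 1]
```

Separating the floor-level graph from the per-floor grids keeps cross-floor reasoning cheap: deciding whether another floor is reachable only touches the small topology, not every map cell.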

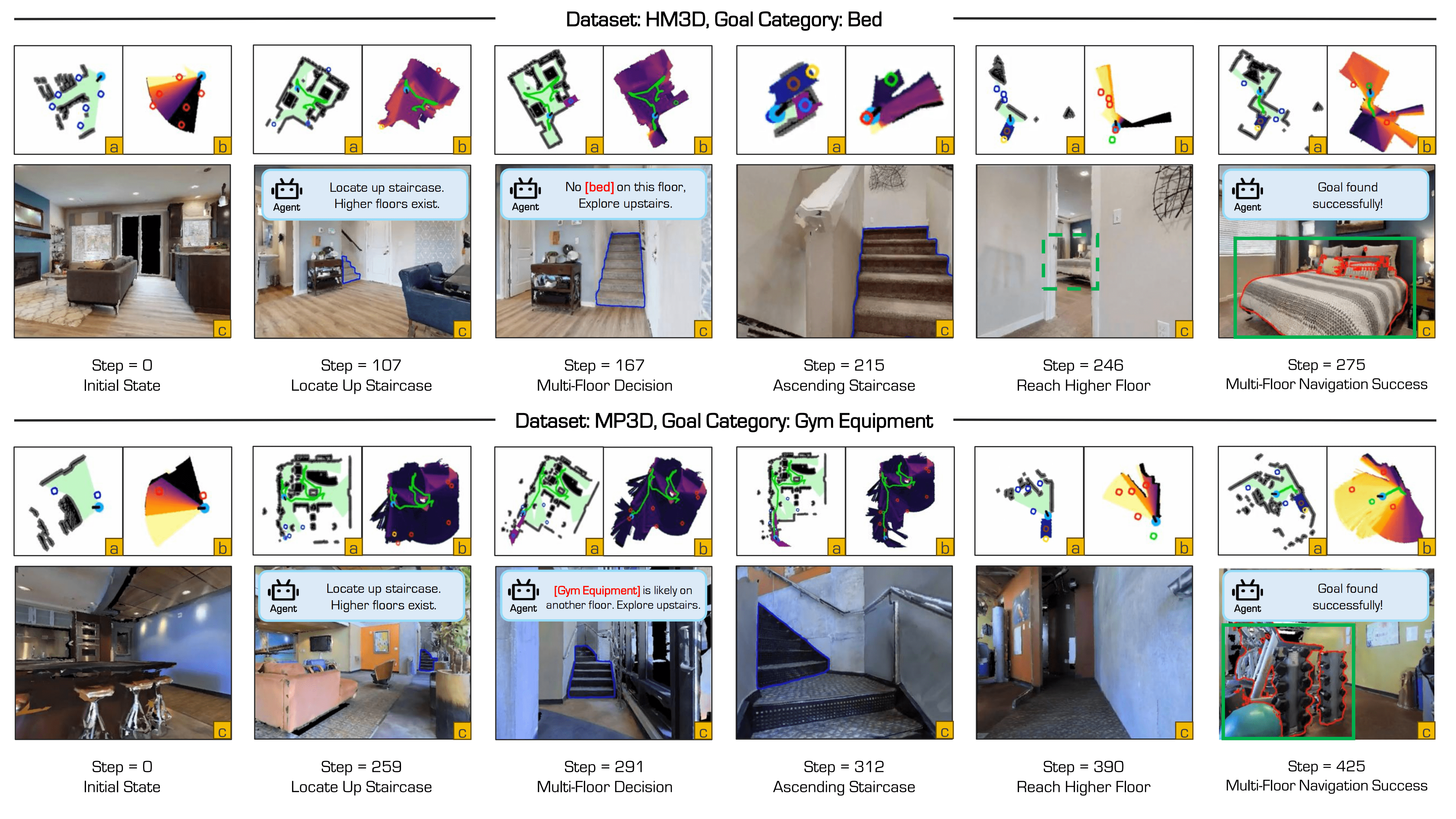

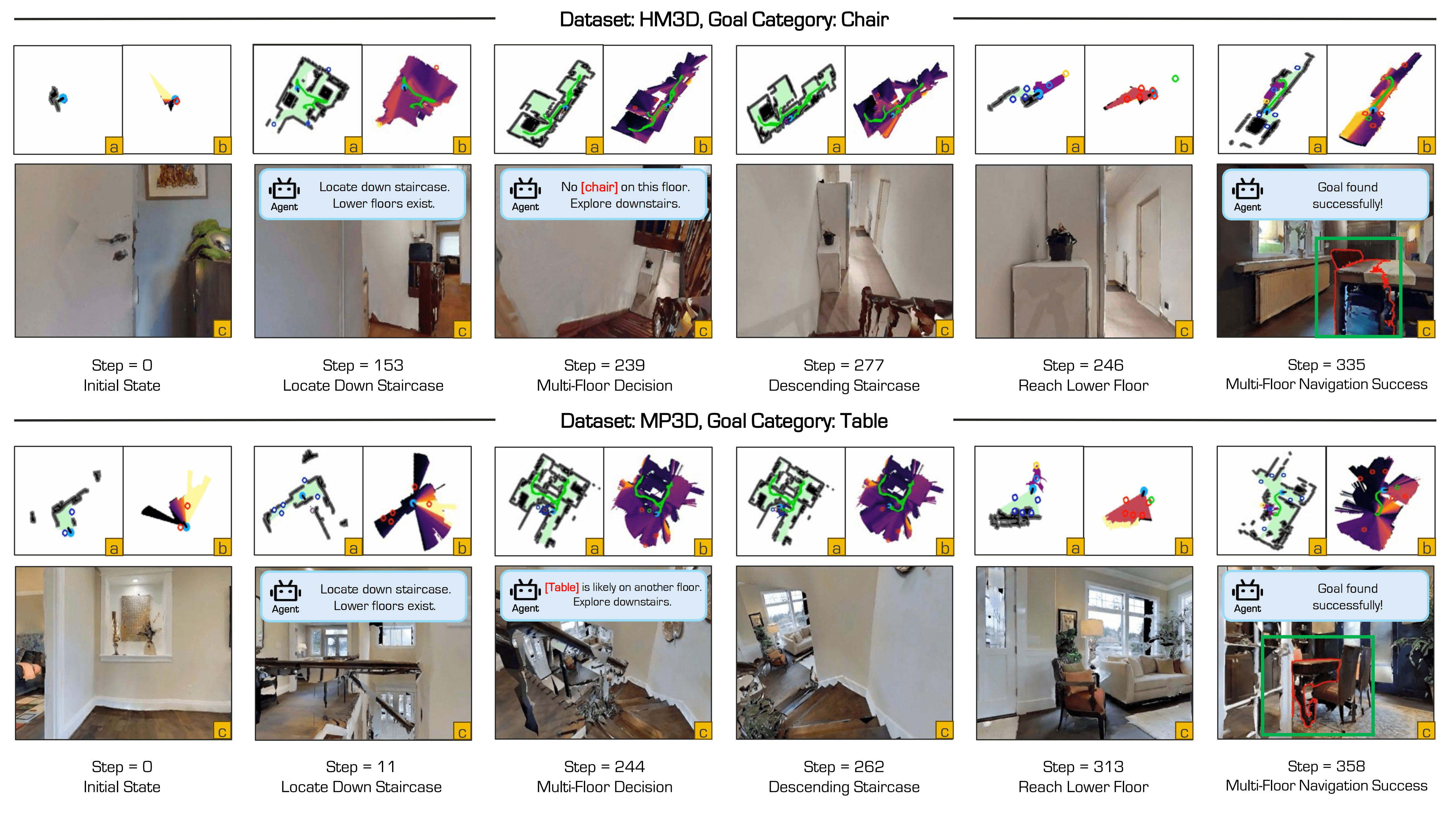

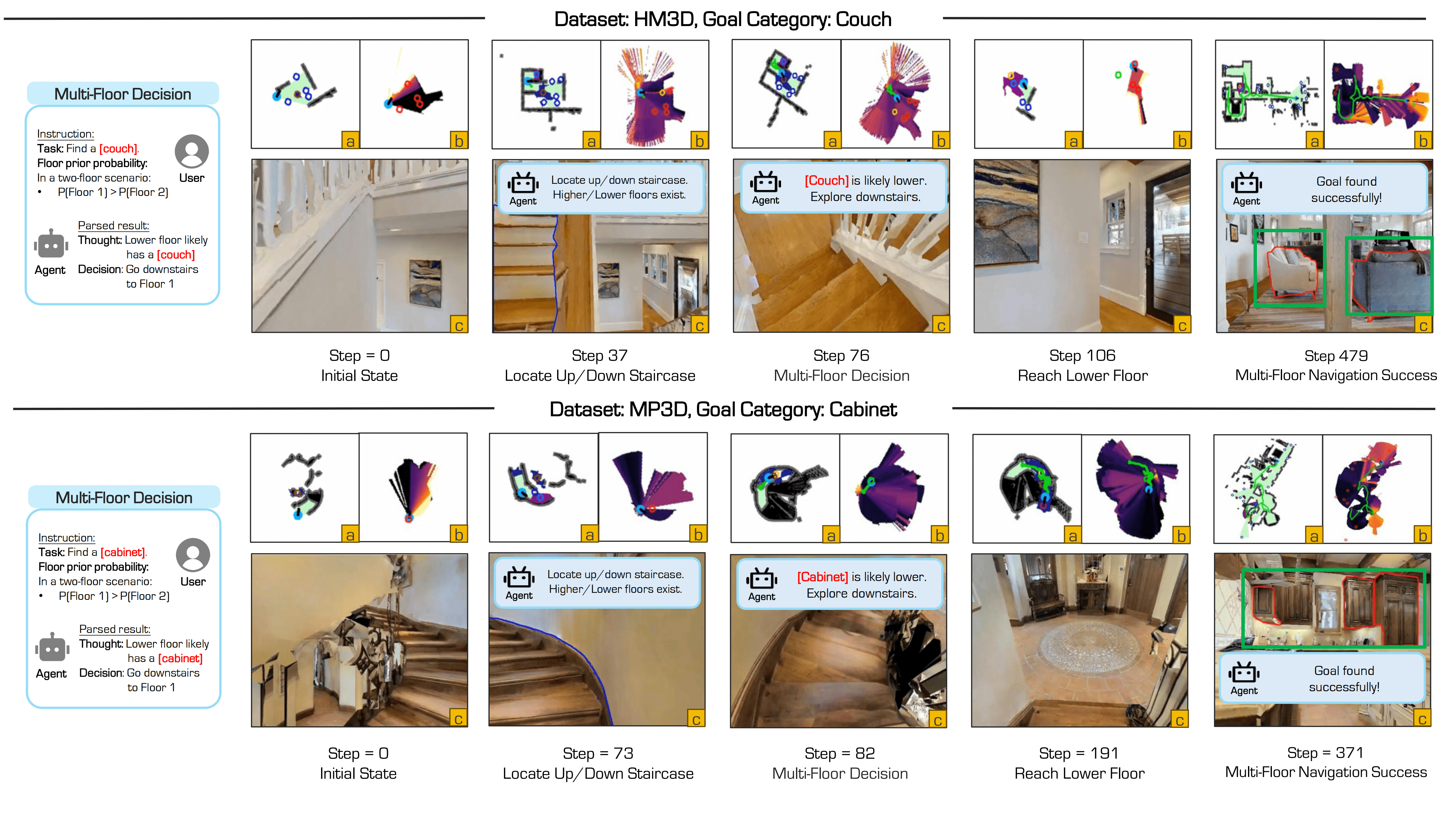

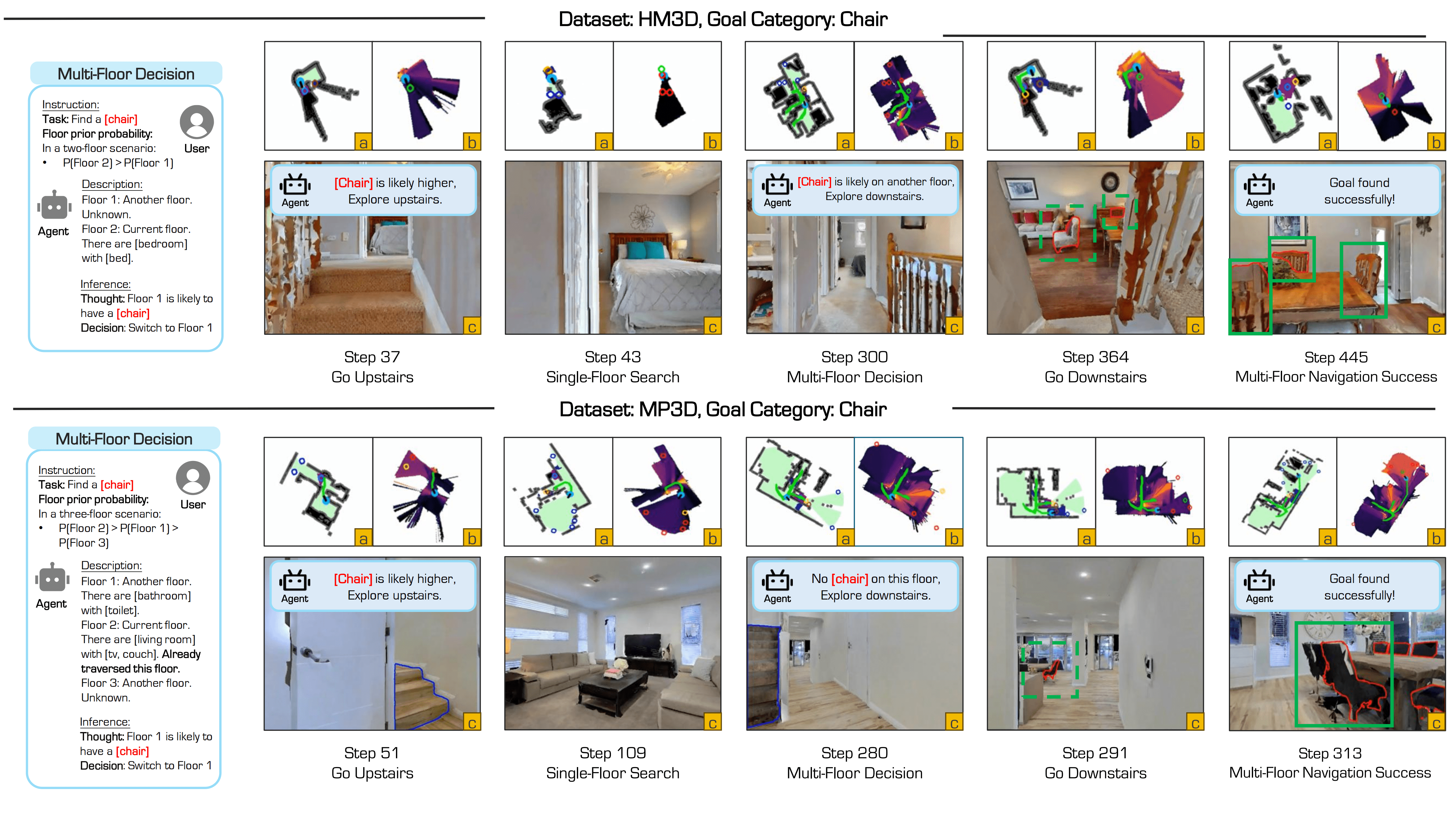

Fig: Motivation of ASCENT. Unlike prior approaches that fail in multi-floor scenarios, our method enables online multi-floor navigation. By reasoning across floors, our policy succeeds in locating the goal and demonstrates a meaningful step forward in Zero-Shot Object-Goal Navigation.

Fig: Framework overview of ASCENT. The system takes RGB-D inputs (top-left) and outputs navigation actions (bottom-right). The Multi-Floor Abstraction module (top) builds intra-floor BEV maps and models inter-floor connectivity. The Coarse-to-Fine Reasoning module (bottom) uses an LLM for contextual reasoning across floors. Together, these modules enable floor-aware Zero-Shot Object-Goal Navigation.
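The coarse-to-fine decision step can be sketched roughly as follows, assuming each frontier already carries a numeric value score and a short text description. The coarse stage keeps the top-k frontiers by score; the fine stage asks a language model to pick one of them. All names (`Frontier`, `coarse_rank`, `build_prompt`, `fine_select`), the prompt format, and the fallback rule are illustrative assumptions rather than ASCENT's actual design, and `llm` is any callable from prompt to reply, stubbed here instead of calling a real model.

```python
import re
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Frontier:
    frontier_id: int
    floor_id: int
    score: float      # assumed value score, e.g. goal/image similarity from a VLM
    description: str  # assumed short caption of what is visible near the frontier


def coarse_rank(frontiers: List[Frontier], k: int = 3) -> List[Frontier]:
    """Coarse stage: keep only the k highest-scoring frontiers."""
    return sorted(frontiers, key=lambda f: f.score, reverse=True)[:k]


def build_prompt(goal: str, candidates: List[Frontier]) -> str:
    """Describe each candidate, including its floor, for the language model."""
    lines = [f"Goal object: {goal}.", "Candidate frontiers:"]
    for i, f in enumerate(candidates):
        lines.append(f"{i}: floor {f.floor_id}, near {f.description}")
    lines.append("Reply with the index of the most promising candidate.")
    return "\n".join(lines)


def fine_select(goal: str, frontiers: List[Frontier],
                llm: Callable[[str], str], k: int = 3) -> Frontier:
    """Fine stage: let the LLM choose among the coarse candidates."""
    candidates = coarse_rank(frontiers, k)
    if not candidates:
        raise ValueError("no frontiers to choose from")
    reply = llm(build_prompt(goal, candidates))
    match = re.search(r"\d+", reply)
    if match and int(match.group()) < len(candidates):
        return candidates[int(match.group())]
    return candidates[0]  # unusable reply: fall back to the top coarse candidate


def stub_llm(prompt: str) -> str:
    # Stands in for a real LLM call; always picks candidate 1.
    return "1"


if __name__ == "__main__":
    frontiers = [
        Frontier(0, 0, 0.9, "a sofa and a TV"),
        Frontier(1, 1, 0.7, "a doorway into a bedroom"),
        Frontier(2, 0, 0.2, "an empty hallway"),
        Frontier(3, 0, 0.5, "a kitchen counter"),
    ]
    print(fine_select("bed", frontiers, stub_llm).frontier_id)  # 1
```

Keeping k small bounds the prompt length, and the numeric fallback keeps the agent moving when the model's reply cannot be parsed.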

Results on the HM3D and MP3D datasets. Metrics: SR (Success Rate) / SPL (Success weighted by Path Length).

| Setting | Method | Venue | Vision | Language | HM3D SR | HM3D SPL | MP3D SR | MP3D SPL |
|---|---|---|---|---|---|---|---|---|
| **Learning-Based** | | | | | | | | |
| Single-Floor | SemExp | NeurIPS'20 | - | - | 37.9 | 18.8 | 36.0 | 14.4 |
| | Aux | ICCV'21 | - | - | - | - | 30.3 | 10.8 |
| | PONI | CVPR'22 | - | - | - | - | 31.8 | 12.1 |
| | Habitat-Web | CVPR'22 | - | - | 41.5 | 16.0 | 35.4 | 10.2 |
| | RIM | IROS'23 | - | - | 57.8 | 27.2 | 50.3 | 17.0 |
| Multi-Floor | PIRLNav | CVPR'23 | - | - | 64.1 | 27.1 | - | - |
| | XGX | ICRA'24 | - | - | 72.9 | 35.7 | - | - |
| **Zero-Shot** | | | | | | | | |
| Single-Floor | ZSON | NeurIPS'22 | CLIP | - | 25.5 | 12.6 | 15.3 | 4.8 |
| | L3MVN | IROS'23 | - | GPT-2 | 50.4 | 23.1 | 34.9 | 14.5 |
| | SemUtil | RSS'23 | - | BERT | 54.0 | 24.9 | - | - |
| | CoW | CVPR'23 | CLIP | - | 32.0 | 18.1 | - | - |
| | ESC | ICML'23 | - | GPT-3.5 | 39.2 | 22.3 | 28.7 | 14.2 |
| | PSL | ECCV'24 | CLIP | - | 42.4 | 19.2 | - | - |
| | VoroNav | ICML'24 | BLIP | GPT-3.5 | 42.0 | 26.0 | - | - |
| | PixNav | ICRA'24 | LLaMA-Adapter | GPT-4 | 37.9 | 20.5 | - | - |
| | Trihelper | IROS'24 | Qwen-VLChat-Int4 | GPT-2 | 56.5 | 25.3 | - | - |
| | VLFM | ICRA'24 | BLIP-2 | - | 52.5 | 30.4 | 36.4 | 17.5 |
| | GAMap | NeurIPS'24 | CLIP | GPT-4V | 53.1 | 26.0 | - | - |
| | SG-Nav | NeurIPS'24 | LLaVA | GPT-4 | 54.0 | 24.9 | 40.2 | 16.0 |
| | InstructNav | CoRL'24 | - | GPT-4V | 58.0 | 20.9 | - | - |
| | UniGoal | CVPR'25 | LLaVA | LLaMA-2 | 54.0 | 24.9 | 41.0 | 16.4 |
| Multi-Floor | MFNP | ICRA'25 | Qwen-VLChat | Qwen2-7B | 58.3 | 26.7 | 41.1 | 15.4 |
| | Ours | – | BLIP-2 | Qwen2.5-7B | 65.4 | 33.5 | 44.5 | 15.5 |

Tab: Quantitative results on the Object-Goal Navigation (OGN) task. The table compares learning-based and zero-shot methods on the HM3D and MP3D datasets using Success Rate (SR) and Success weighted by Path Length (SPL). In the open-vocabulary, multi-floor setting, our approach achieves the highest SR among zero-shot methods on both datasets and the highest zero-shot SPL on HM3D.
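For reference, SR is the fraction of successful episodes, and SPL weights each success S_i by the ratio of the shortest-path length l_i to the longer of the agent's path length p_i and l_i, averaged over all N episodes. The helper below computes both as percentages, matching the table's units. The `Episode` record and the `sr_spl` name are hypothetical, and the demo numbers are made up rather than taken from any benchmark run.

```python
from dataclasses import dataclass
from typing import Iterable, Tuple


@dataclass
class Episode:
    success: bool
    shortest_path: float  # l_i: geodesic start-to-goal distance (assumed > 0)
    agent_path: float     # p_i: length of the path the agent actually travelled


def sr_spl(episodes: Iterable[Episode]) -> Tuple[float, float]:
    """Return (SR, SPL) as percentages.

    SR  = (1/N) * sum_i S_i
    SPL = (1/N) * sum_i S_i * l_i / max(p_i, l_i)
    """
    eps = list(episodes)
    if not eps:
        raise ValueError("need at least one episode")
    n = len(eps)
    sr = sum(e.success for e in eps) / n
    # Failed episodes contribute 0, so only successes enter the SPL sum.
    spl = sum(e.shortest_path / max(e.agent_path, e.shortest_path)
              for e in eps if e.success) / n
    return 100.0 * sr, 100.0 * spl


if __name__ == "__main__":
    demo = [
        Episode(True, 5.0, 10.0),   # reached goal, path twice as long as optimal
        Episode(True, 4.0, 4.0),    # reached goal along the shortest path
        Episode(False, 6.0, 20.0),  # failed: contributes 0 to both metrics
    ]
    sr, spl = sr_spl(demo)
    print(round(sr, 1), round(spl, 1))  # 66.7 50.0
```

Because SPL can never exceed SR for the same episodes, a method with high SR but low SPL (for example, one that explores many floors before committing) reaches goals reliably but along longer-than-optimal paths.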